The Hidden Costs of “Free” AI Tools

Reading about costly implementations, elaborate policies, and other risks of buying into a particular LLM, you might be tempted to have staff rely on free accounts. After all, free accounts give them access to many of the benefits, and you don’t get locked into a single tool.

For mission-driven organizations, “free” AI tools are rarely truly free. The bill usually arrives later in lost control over data, sudden product changes, staff time you never budgeted, equity gaps you did not intend to create, or compliance and reputational risks that land on your desk all at once.

The good news is that most of these costs are predictable. With a thoughtful intake process, you can surface them before adoption and mitigate them.

This post reviews the hidden costs of free AI tools for mission-driven organizations, along with concrete ways to catch them early.

1. Data exposure and loss of control

“Free” often means your data is part of the bargain

Free AI tools frequently rely on broad rights to inputs, outputs, and usage metadata. Even when a vendor promises not to train on your data, those promises may depend on defaults that can change, features that can be added, or terms that can be updated unilaterally.

The risk here is not just privacy in the narrow sense. It is loss of control over how your data is used, retained, combined, or repurposed over time, including logs and derivative artifacts you may never see.

What to do:

Check the terms of service. Many free AI tools, like ChatGPT, let even free users limit how their data is reused. If staff use a tool without an account, settings revert to whatever the default is that day, so check the setting before each use. On ChatGPT, hit the question mark in the top right, select “Settings,” and ensure “Improve the Model for everyone” is OFF.

2. Product instability and behavioral drift

When the tool changes underneath you

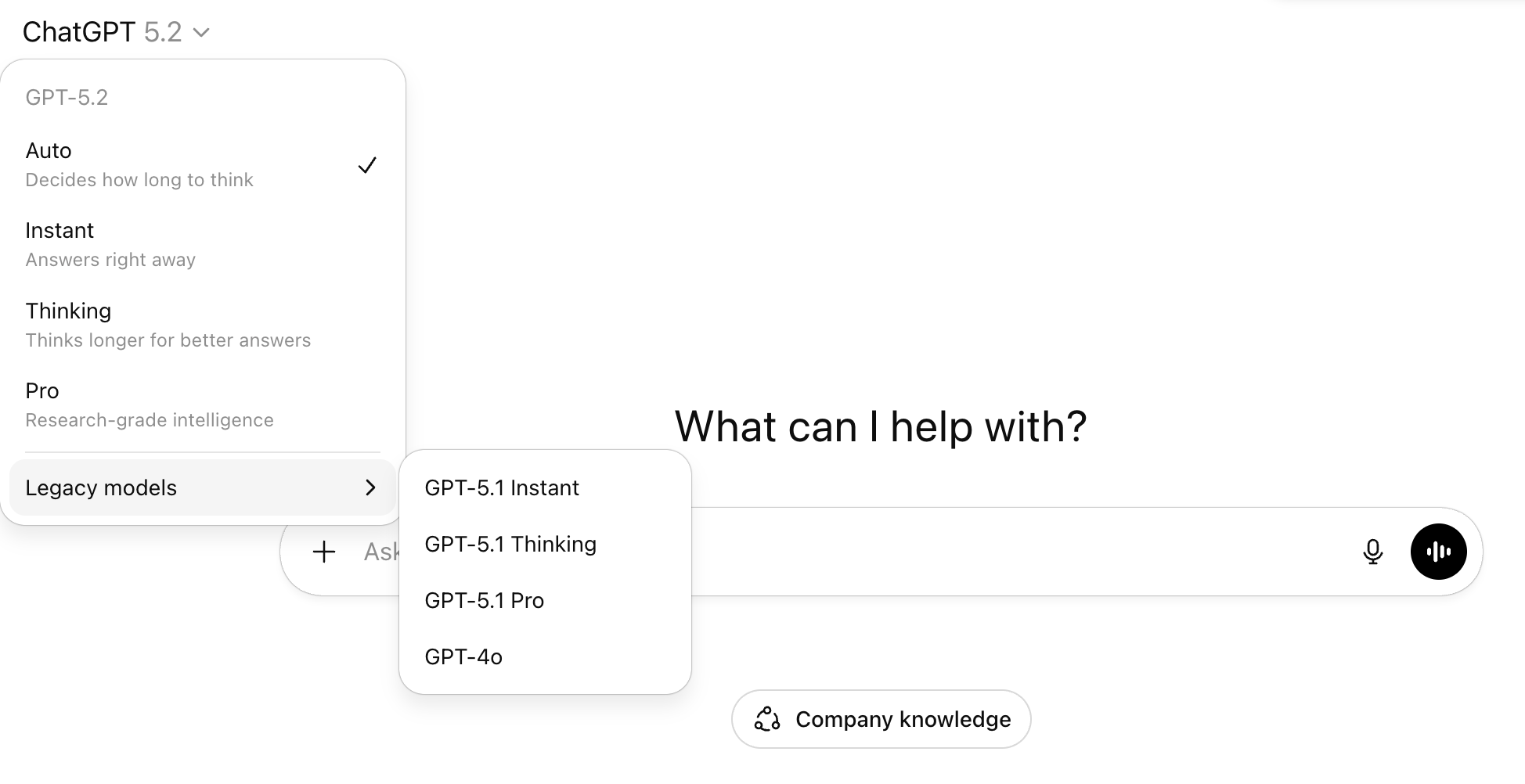

Tools change often. Models update, defaults switch, features appear, and safety settings move. These changes can materially alter how a system behaves, even if your workflow stays the same. A policy of “let people use whichever free tool they like” makes it difficult for IT or leadership to monitor changes across all of the options. On some major chatbots, a paid account is the only way to choose directly which model you’re using.

This risk is not hypothetical. The NEDA case illustrates how a shift from scripted responses to generative behavior can transform a low-risk tool into a mission-critical liability. The problem in that case was not “free models,” but unmanaged product change and the absence of guardrails to catch it.

What to do:

Designate someone, preferably someone with some software and security expertise, to keep an eye on tool updates and send out a lay-language digest of changes that affect the free accounts staff are using.

On a paid account, ChatGPT lets you select models, including older ones that may have more predictable output after a major update.

3. Unbudgeted staff time and skill load

“Free” tools still cost hours

AI tools save time only after people learn how to use them well. That learning includes giving context, iterating, checking outputs, and knowing when not to rely on the system. A free-for-all approach makes it easy to overlook critical training.

For example, drafting materials with an LLM saves writing time but still requires gathering evidence, answering follow-up questions, and verifying claims. Without training, critical quality assurance steps can easily get skipped, or left out of planning once the rough draft goes around, and that creates risk.

What to do:

Budget explicitly for training, both at implementation and for major tool updates. At Our AI Futures Lab, we offer low-cost trainings for mission-driven organizations that are tool-agnostic and applicable to free tools as well as paid ones.

4. Environmental and sustainability costs

Free models are sometimes older and less resource-efficient

Free tiers may default to older, larger, or less efficient models than your use case requires. Energy, water, and hardware impacts increasingly surface in governance, donor, and public conversations, even when they do not dominate technical evaluations.

What to do:

Read up on sustainability in LLMs and show staff how to select smaller models.

Bottom line

Free tools can offer powerful computing at no direct dollar cost, but they can also create hidden costs in data control, product stability, staff time, and sustainability.

If you want scaffolding you can use this week, the Mission-First AI Starter Kit includes a vendor evaluation script, rubric, and policy templates designed for exactly these trade-offs. The free workbook we’ve made available at Our AI Futures Lab also offers a prompt library template. You can use it to start documenting and refining prompts and sharing best practices across your team, which reduces some of the risks of “free”-for-all AI policies.

——

LLM disclosure: I had an old draft that some LLM had drafted based on my notes, but it was a mess. I put it into ChatGPT 5.2 and asked:

“I have this draft that I'd like to improve. I think the list of "hidden costs" is a bit confused. For example, the first heading is "privacy" but much of the text describes the risk of models changing (also, NEDA was not using a free model.) Can you review this and redraft it? Before reading it, please come up with a list of hidden costs to mission-driven organizations of using free AI tools (doesn't have to be limited to LLMs). Then, review the draft and make sure that the ultimate draft includes a complete, mutually exclusive list of hidden costs of free AI tools for mission driven organizations:”

This created a much-too-long list that included risks of all LLMs, not focused on free ones. I edited extensively to focus the post more tightly on the hidden costs of letting folks use any free tool they like. I don’t mind; it’s easy for me to delete, and it helped me realize that the scope I wanted was more specific than the costs of one person using one free tool.